Testimony of Rosemary Shahan, President,

Consumers for Auto Reliability and Safety

California State Senate Committee on Transportation and Housing

Informational Hearing

February 20, 2018

Mr. Chair and Members, thank you for the opportunity to testify today. I am Rosemary Shahan, President of Consumers for Auto Reliability and Safety, perhaps best known for initiating California's landmark auto lemon law, which became the model for similar laws enacted in all 50 states. When then-President Reagan's administration abdicated its responsibility to ensure public safety by deregulating the auto industry, and coddled auto manufacturers who made seriously defective and unsafe vehicles, the citizens of California and all 50 states rose up and demanded that manufacturers honor their warranties and, if they fail to fix the defects, buy back the lemon cars.

Since then, CARS has spearheaded enactment of numerous landmark laws to improve protections for Californians and the motoring public nationwide. Our experience gaining passage of landmark safety legislation in a Republican Congress may help shed some light on how federal legislation can work to enhance safety, when responsible members of the auto industry decide to put public safety first.

In 2015, working alongside partners in the rental car industry, we won passage of the federal Raechel and Jacqueline Houck Safe Rental Car Act, sponsored by Senator Schumer and co-sponsored by Senators Boxer and Feinstein and by Representative Capps, as well as Members of Congress representing other states, and signed into law by President Obama as part of the FAST Act. We worked closely with Raechel and Jackie's mother, Cally Houck, for passage, after her two daughters, ages 20 and 24, were killed by an unrepaired recalled rental car while driving back to Santa Cruz from visiting their parents in Ojai.

At first, the rental car industry opposed the legislation, which for the first time gives the National Highway Traffic Safety Administration the authority to regulate the rental car industry regarding unsafe recalled cars, and prohibits rental car companies with fleets of 35 or more vehicles from renting, loaning or selling unrepaired recalled cars. To their credit, the rental car companies, who are the largest purchasers of new vehicles in North America, changed their position and lobbied Congress with us, asking to be regulated. They had the wisdom and foresight to realize that the public overwhelmingly expects that the vehicles they rent will be safe to drive, and it was in their own interest to make sure that their vehicles are indeed safe. We also had the strong support of the Obama Administration.

Now we are faced with a new challenge: how to regulate the burgeoning industry of developing autonomous vehicles at a time when the Trump Administration is on a deregulatory binge, and the National Highway Traffic Safety Administration lacks the resources and expertise to do its vitally important job of protecting the American public from the pitfalls of premature deployment of new autonomous vehicle technologies. Unfortunately, the impending lack of federal oversight and the cut-throat competition to be first in offering autonomous vehicles threaten not only to result in unnecessary fatalities and injuries, but also to undermine the public's confidence in the promising new technology that is emerging, and to harm the businesses that are placing risky bets in hopes of huge returns.

As the Committee's analysis points out, and as polling has shown, the public is already skeptical about autonomous vehicles. But Washington seems intent on realizing their worst fears.

As the President of Advocates for Highway and Auto Safety, whose Board of Directors includes leading safety and health advocates and representatives of the nation's leading auto insurance companies, recently testified before Congress:

"….the process created in the AV START Act [S 1885] will allow untested and unproven AVs to be sold to the public without appropriate independent or governmental oversight to provide necessary protections to both those in the AVs and those sharing the roads with them. In addition, the AV START Act will potentially allow the sale of hundreds of thousands of AVs that are exempt from existing federal motor vehicle safety standards (FMVSS). In fact, longstanding federal law was recently amended to allow for an unlimited number of vehicles that are not in compliance with FMVSS to be tested on public roads, despite opposition from consumer, public health and safety organizations. This was a massive increase from the previous limit of 2,500 vehicles for most manufacturers… The AV START Act 'takes a wrong turn' by allowing for the sale of potentially millions of AVs to the public without minimum safety standards, without necessary consumer information so that the public understands their capabilities and limitations, and without cybersecurity standards to protect against hackers."

1

As the FBI and other cybersecurity experts have warned, the advent of autonomous vehicle technology makes the need for cybersecurity standards to prevent hacking all the more urgent. "According to Patrick Linn, director of the Ethics and Emerging Sciences Group at California Polytechnic University, 'Self-driving cars may enable new crimes that we can't even imagine today.'"

2

"The FBI, in an unclassified report obtained by the Guardian in 2014, voiced concern about how 'game changing' autonomous cars may become for criminals, hackers and terrorists, turning the vehicles into more potentially lethal weapons than they are today."

Unfortunately, with the notable exceptions of Senators such as Blumenthal, Markey, and Feinstein, too many members of Congress appear to be ignoring that stark reality. The message from Washington is that we should trust that the auto manufacturers, which have a long history of engaging in widespread illegal activity, concealing safety defects, failing to report fatalities and injuries, and defrauding the public, this time will somehow change their stripes, and "voluntarily" get it right.

Should we just trust Toyota, which paid $1.2 billion in fines to the US Department of Justice for concealing the sudden acceleration defect, which came to light only after a crash near San Diego that killed a CHP officer, his wife, their 13-year-old daughter, and his brother-in-law?

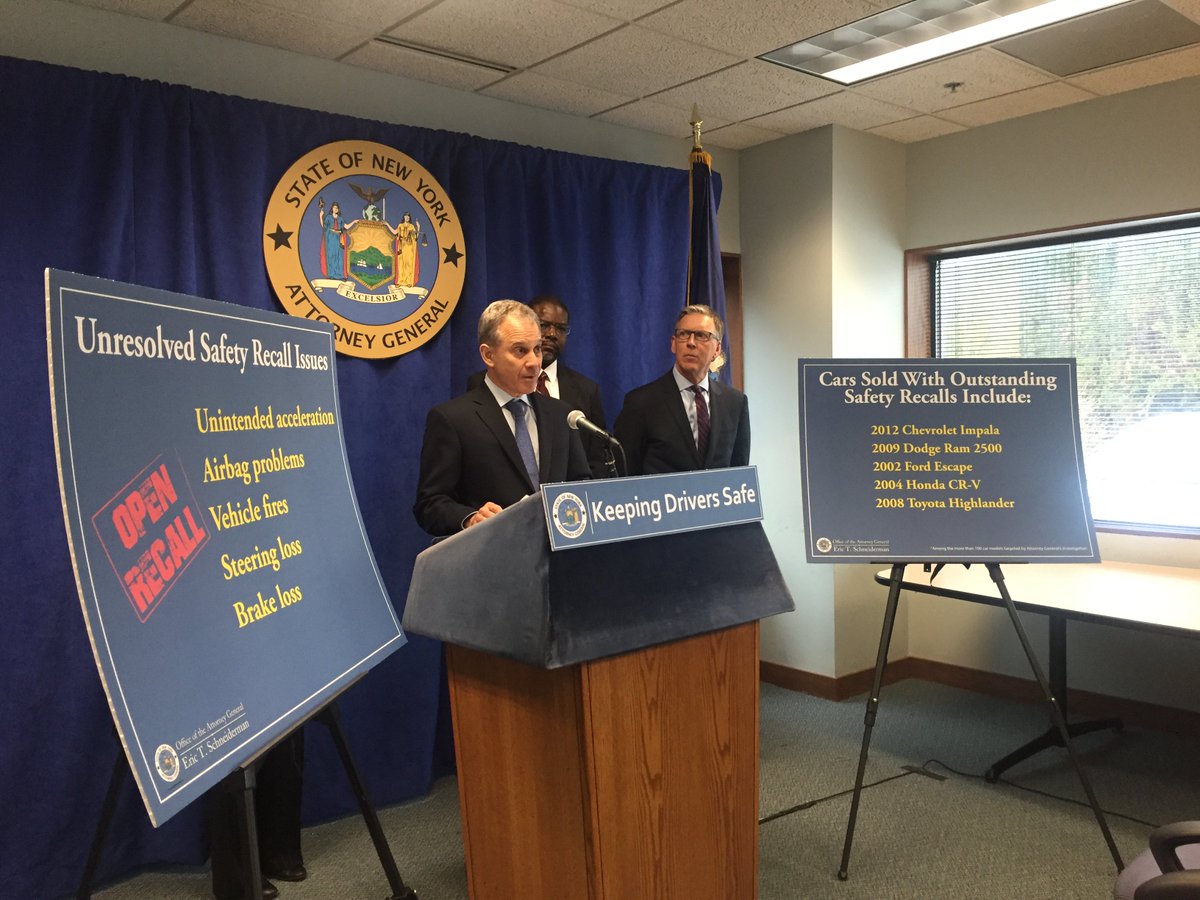

Should we just trust Fiat Chrysler, which paid $105 million in fines, for failing to complete 23 safety recalls covering more than 11 million vehicles?

Should we just trust General Motors, which paid $900 million in fines and was forced to recall over 30 million cars, after it concealed the deadly ignition switch defect for over a decade, leading to at least 174 deaths?

Should we trust Volkswagen, which pleaded guilty to criminal activity in gaming the results of its emissions tests, and was forced to pay billions of dollars in penalties and in refunds to VW owners?

Should we just trust Honda, GM, Toyota, Subaru, Ford, and many other manufacturers which installed ticking time bomb Takata airbags in tens of millions of their vehicles, leading to the largest auto safety recall in U.S. history, killing at least 21 people and maiming hundreds of others, causing blindness and other debilitating injuries, and ending with Takata in bankruptcy while tens of millions of angry and frustrated consumers are forced to wait months, or even years, for repair parts?

Should we trust Tesla, which blames the drivers of its "Autopilot" semi-autonomous vehicles for not being sufficiently attentive and braking in time, after they collide with a tractor trailer in Florida or, very recently, a fire truck that was parked on the 405 freeway in Los Angeles? Note: Tesla's owner's manual does warn that the system is ill-equipped to handle this exact sort of situation:

"'Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph (80 km/h) and a vehicle you are following moves out of your driving path and a stationary vehicle or object is in front of you instead.'" 3

Should we trust Uber, which concealed for over a year the fact that hackers had stolen personal data, including driver's license numbers and addresses, from 57 million driver and rider accounts, and paid ransom to the hackers, then attempted to make it appear that the hackers were doing them a favor and merely helping debug their system? Note: Uber is asserting arbitration in the ensuing lawsuit, further concealing its wrongdoing and the ensuing cover-up.

Should we trust Uber, which advertises that its vehicles are safe, but fails to screen out vehicles with potentially lethal safety recalls?

Should we trust any of the dozen companies that currently test autonomous vehicles in California to sell autonomous vehicles in our state, when they report to the California DMV that last year, in the aggregate, their test fleets had disengagements, when the drivers had to take control in order to drive safely, once every 220 miles?

Of course, the answer to each of these questions is NO. Particularly absent federal safety standards, what California does to protect its citizens is all the more important, both for protecting public safety and for providing the regulatory framework needed to ensure that the industry doesn't continue to make the same tragic and preventable mistakes.

Accordingly, CARS opposes federal preemption regarding the regulation of AVs. States should remain free to protect their own citizens. We also oppose allowing manufacturers of AVs to impose forced arbitration, which denies victims recourse in a court of law and allows the concealment of defects, perpetuating fatalities and injuries.

CARS also recommends, specifically for adoption in California, that our state:

- Require a rigorously proven track record of safe operation, without disengagements, in all kinds of traffic conditions and in various weather conditions including fog, rain, heavy smoke, and snow, prior to allowing deployment (sale / leasing / ride sharing)

- Require AVs to pass a "driver's test" proving that they can "see" objects and the driving environment accurately, and avoid collisions, including collisions with stationary objects, prior to allowing them to be deployed / sold to the public

- Prohibit deployment of Level 3-4 vehicles, which either rely on human drivers not to become distracted and to take the wheel at a moment's notice, or are restricted to limited geo-locations or weather conditions

- Mandate full public disclosure / reporting of crashes involving AVs

- Establish reasonable standards for financial stability / insurance prior to sale of AVs to the public (existing law requires only $5 million, which is inadequate to weed out entities that are undercapitalized for purposes of selling vehicles to the public)

- Prohibit misleading terminology and false advertising regarding AVs and their capacity to drive safely

Thank you again for the opportunity to testify. I look forward to any questions you may have.

_______________________________________________________________________________________

Attachments and further resources:

California DMV definition of "disengagement": "A deactivation of the autonomous mode when a failure of the autonomous technology is detected or when the safe operation of the vehicle requires that the autonomous vehicle test driver disengage the autonomous mode and take immediate manual control of the vehicle."

Autonomous Vehicle Disengagement Reports, 2017

"California's Autonomous Vehicle Reports are the Best in the Country, But Nowhere Good Enough," Jalopnik, February 1, 2018:

"...there's the potential for serious gaps in what even qualifies as an event that required a human to manually take control of the car. That only means the public's going to have to rely more and more on the company's word—something that became evident last year, when it emerged that federal regulators could only rely entirely on Tesla's data to conclude the automaker's Autosteer capability led to a 40 percent drop in crashes. That seems like quite a gamble when it comes to garnering the public's trust to eventually let go of the wheel entirely and sit back while the automated tech steers."

"Consumer Watchdog Warns U.S. Senate New Data Shows Self-driving Cars Cannot Drive Themselves," John Simpson, Consumer Watchdog

"FBI Warns Driverless Cars Could be Used as Lethal Weapons: Internal report sees benefits for road safety, but warns that autonomy will create greater potential for criminal 'multitasking,'"

The Guardian, July 16, 2014. Available at:

https://www.theguardian.com/technology/2014/jul/16/google-fbi-driverless-cars-leathal-weapons-autonomous

"Why Tesla's 'AutoPilot' Can't see a Stopped Firetruck,"

Wired, January 25, 2018, available at:

https://www.wired.com/story/tesla-autopilot-why-crash-radar/ :

"On Monday, a Tesla Model S slammed into the back of a stopped firetruck on the 405 freeway in Los Angeles County….How is it possible that one of the most advanced driving systems on the planet doesn't see a freaking fire truck, dead ahead?

Tesla['s]...manual does warn that the system is ill-equipped to handle this exact sort of situation: 'Traffic-Aware Cruise Control cannot detect all objects and may not brake/decelerate for stationary vehicles, especially in situations when you are driving over 50 mph (80 km/h) and a vehicle you are following moves out of your driving path and a stationary vehicle or object is in front of you instead.'

Volvo's semi-autonomous system, Pilot Assist, has the same shortcoming. Say the car in front of the Volvo changes lanes or turns off the road, leaving nothing between the Volvo and a stopped car. 'Pilot Assist will ignore the stationary vehicle and instead accelerate to the stored speed,' Volvo's manual reads, meaning the cruise speed the driver punched in. 'The driver must then intervene and apply the brakes.' In other words, your Volvo won't brake to avoid hitting a stopped car that suddenly appears up ahead. It might even accelerate towards it.

The same is true for any car currently equipped with adaptive cruise control, or automated emergency braking. It sounds like a glaring flaw, the kind of horrible mistake engineers race to eliminate. Nope. These systems are designed to ignore static obstacles because otherwise, they couldn't work at all….

Raj Rajkumar, who researches autonomous driving at Carnegie Mellon University, thinks those assumptions concern one of Tesla's key sensors. 'The radars they use are apparently meant for detecting moving objects (as typically used in adaptive cruise control systems), and seem to be not very good in detecting stationary objects,' he says."

"A Cheaper Airbag, and Takata's Road to a Deadly Crisis," the New York Times, August 26, 2016.

Consumers for Auto Reliability and Safety (CARS) comments to the California Department of Motor Vehicles, April 24, 2017

Consumers for Auto Reliability and Safety (CARS) comments to the California Department of Motor Vehicles, October 26, 2017

_______________________________________________________________________________________

1 Statement of Catherine Chase, President, Advocates for Highway and Auto Safety, on "Driving Automotive Innovation and Federal Policies", Submitted to the U.S. Senate Committee on Commerce, Science, and Transportation, January 24, 2018.

2 "Want guns? Drugs? Self-driving cars may become the perfect delivery vehicle," Sacramento Bee, January 9, 2018.

3 "Why Tesla's 'AutoPilot' Can't see a Stopped Firetruck," Wired, January 25, 2018.